Machine identity is becoming the biggest threat to cloud security

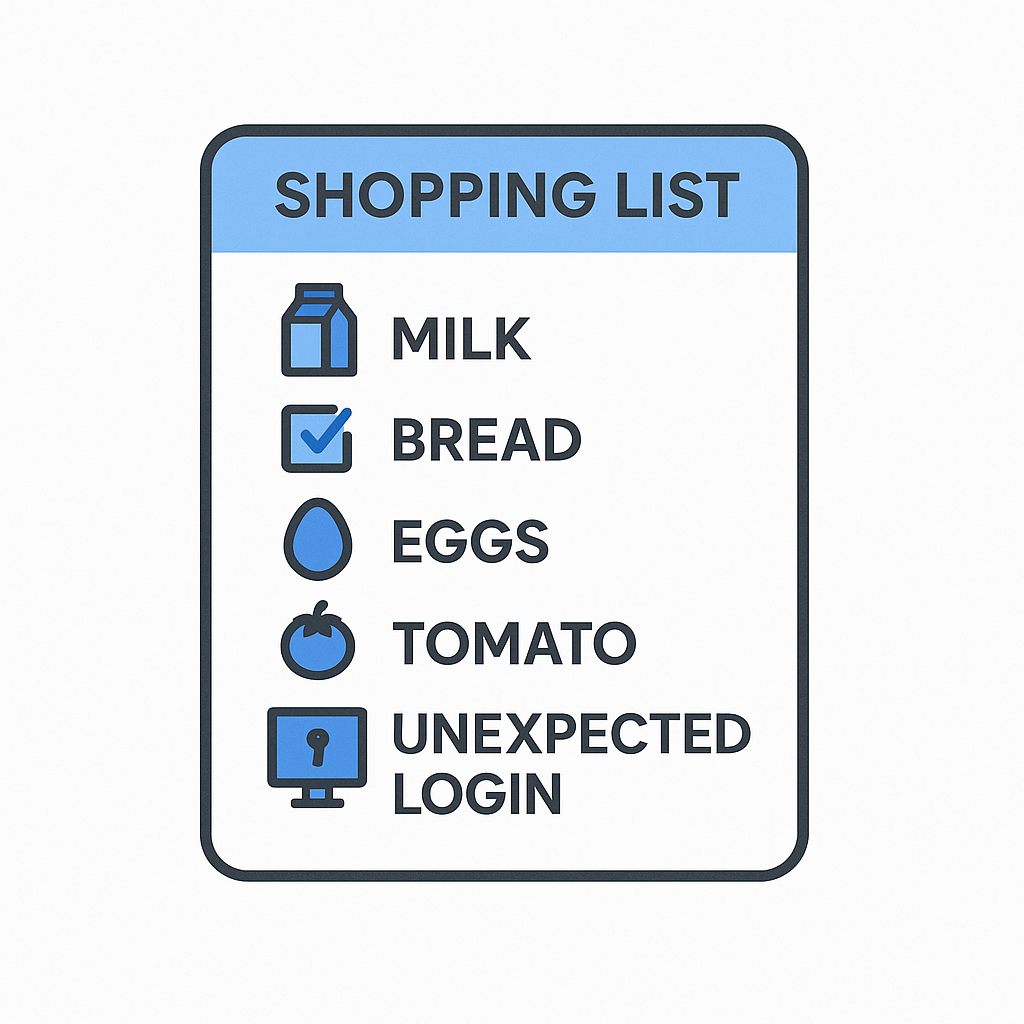

We spend a lot of time worrying about human users: phishing, weak passwords, MFA policies, dodgy laptops on hotel Wi-Fi. But if you look at the big cloud incidents over the last few years, a different pattern emerges.

It’s often not Trevor in Accounts.

It’s not even your developers.

It’s machine identities. A big chunk of the problem is service accounts, tokens, keys, CI/CD pipelines, default roles, and invisible background “plumbing” that nobody ever logs in as, but everything relies on.

Those identities often have more power than any single human, live much longer, and are monitored a lot less. And when they go wrong, they go very, very wrong.

This is where cloud access really falls apart.

Human vs. Machine Identity: What’s the Difference, Really?

Human identities are obvious: employees, contractors, admins. They sign in via SSO, hit your IdP, get assigned roles, and (hopefully) have MFA turned on.

Machine identities are everything else:

- cloud service accounts

- CI/CD tokens and deployment keys

- API keys used by third-party tools

- default identities created by cloud providers

- certificates and signing keys

- AI agents

None of them has HR records. They don’t leave the company. They don’t grumble or raise tickets when you give them excessive privilege. And they’re exactly what attackers love to steal, replay, or piggyback on.

Case Study 1: Dropbox Sign and the “Unremarkable” Service Account

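In spring 2024, Dropbox Sign disclosed that attackers had compromised a service account belonging to its automated system configuration tool, part of the platform’s backend. It wasn’t a human user, and it was unlikely to be high on anyone’s list of attack paths. But that service account had privileges to take a wide range of actions in Sign’s production environment. Once inside, attackers accessed customer data: emails, usernames, and account settings for all Dropbox Sign users, plus phone numbers, hashed passwords, API keys, OAuth tokens, and MFA information for some. Dropbox said it found no evidence that the contents of customers’ accounts (their documents or agreements) were accessed.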

The lesson is simple: if an over-privileged machine identity can reach production, you are one misconfiguration away from a headline.

Case Study 2: CircleCI: One Token, Massive Blast Radius

In January 2023, CircleCI reported a breach after malware on an engineer’s laptop stole a valid, 2FA-backed SSO session. Because that engineer could generate production access tokens, the attacker was able to reach sensitive internal systems and exfiltrate customer environment variables, tokens, and keys, along with encryption keys taken from a running process. CircleCI told customers to rotate all tokens, environment variables, SSH keys, and API credentials stored in their platform.

The initial foothold was a single engineer’s session, but that is not where the real damage came from. It came from the long-lived, powerful non-human credentials the platform held, with reach into many customers’ environments.

If your build system holds API keys and service credentials for multiple clouds and SaaS platforms, a compromise of that system is effectively a compromise of every machine identity it manages.

Case Study 3: Capital One: When a Role Becomes the Breach

The 2019 Capital One breach is often described as “a WAF misconfiguration,” but the real pivot point was access to an AWS IAM role. A server-side request forgery (SSRF) attack let the attacker trick the WAF’s EC2 instance into calling the instance metadata service, which returned temporary credentials for an over-privileged IAM role. Those machine credentials were then used to list and read S3 buckets, leading to the theft of around 100 million customer records.

Again, the attacker didn’t need a username and password. They needed a machine identity that could do far more than it should.

Case Study 4: GCP Default Service Accounts: Lateral Movement by Design

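Researchers have shown how an over-permissive default service account in Google Cloud can be used for lateral movement across Compute Engine instances. The Compute Engine default service account, for example, has historically been granted the project-wide Editor role automatically. If a VM runs as an account like that with a broad access scope (such as cloud-platform), compromising just that one instance can give an attacker effective control of the whole project.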

This isn’t a one-off misstep; it’s a common pattern. Defaults are generous, nobody revisits them, and machine identities quietly become the easiest route to “own it all”.
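Checking for this pattern is cheap. Below is a minimal sketch, assuming you have already exported a project’s IAM policy as JSON (for example with `gcloud projects get-iam-policy PROJECT_ID --format=json`), which uses the standard `{"bindings": [{"role": ..., "members": [...]}]}` shape. The function name and example data are hypothetical, and the check deliberately ignores VM access scopes, which also limit what a default account can do from an instance.

```python
# Minimal sketch: flag Google-managed default service accounts that hold
# basic (owner/editor) roles in an exported project IAM policy.
# Function name and example data are hypothetical.

BASIC_ROLES = {"roles/owner", "roles/editor"}

# Email suffixes of the Compute Engine and App Engine default service accounts.
DEFAULT_SA_SUFFIXES = (
    "-compute@developer.gserviceaccount.com",
    "@appspot.gserviceaccount.com",
)


def risky_default_bindings(policy: dict) -> list:
    """Return (member, role) pairs where a default service account holds a basic role."""
    findings = []
    for binding in policy.get("bindings", []):
        role = binding.get("role", "")
        if role not in BASIC_ROLES:
            continue
        for member in binding.get("members", []):
            # Members look like "serviceAccount:<email>" or "user:<email>".
            kind, _, email = member.partition(":")
            if kind == "serviceAccount" and email.endswith(DEFAULT_SA_SUFFIXES):
                findings.append((member, role))
    return findings


example_policy = {
    "bindings": [
        {
            "role": "roles/editor",
            "members": [
                "serviceAccount:123456789-compute@developer.gserviceaccount.com",
                "user:alice@example.com",
            ],
        },
        {
            "role": "roles/storage.objectViewer",
            "members": ["serviceAccount:my-project@appspot.gserviceaccount.com"],
        },
    ]
}

for member, role in risky_default_bindings(example_policy):
    print(f"{member} holds {role}")
```

Only the first binding is flagged: the human user is out of scope for this check, and the App Engine account holds a narrow, non-basic role.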

What These Incidents Have in Common

Across all of these examples, you see the same themes:

- Non-human identities with broad, persistent permissions

- Little visibility into who or what is using those credentials day to day

- Weak lifecycle management: credentials that live too long or never get revisited

- Cross-environment reach: access into multiple systems, environments, or tenants

- Human-centric controls (MFA, phishing awareness, SSO policies) that don’t apply

- No behavioral monitoring for machine activity, allowing compromised identities to operate undetected
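Machine identities are actually easier to baseline than humans, because they tend to do the same few things from the same few places. The sketch below shows the simplest version of that idea: learn which (action, source) pairs each identity normally uses, then flag anything new. The event tuple shape is hypothetical (in practice you would parse it out of cloud audit logs), and a real system would add time windows, rarity scoring, and alert routing.

```python
# Minimal sketch of behavioral baselining for machine identities.
# Event shape is hypothetical: (identity, action, source) tuples.
from collections import defaultdict


def build_baseline(history: list) -> dict:
    """Record every (action, source) pair each identity has been seen doing."""
    baseline = defaultdict(set)
    for identity, action, source in history:
        baseline[identity].add((action, source))
    return dict(baseline)


def anomalies(baseline: dict, new_events: list) -> list:
    """Return events whose (action, source) pair was never seen for that identity."""
    return [
        (identity, action, source)
        for identity, action, source in new_events
        if (action, source) not in baseline.get(identity, set())
    ]


history = [
    ("svc-backup", "s3:GetObject", "10.0.1.5"),
    ("svc-backup", "s3:PutObject", "10.0.1.5"),
    ("ci-deployer", "lambda:UpdateFunctionCode", "ci-runner"),
]
new_events = [
    ("svc-backup", "s3:GetObject", "10.0.1.5"),             # routine
    ("svc-backup", "s3:ListAllMyBuckets", "203.0.113.7"),   # new action, new source
    ("ci-deployer", "iam:CreateAccessKey", "ci-runner"),    # new, sensitive action
]

for event in anomalies(build_baseline(history), new_events):
    print("unusual:", event)
```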

This is the core problem: most organizations built their security model around human access, then quietly handed more power to machines, without giving themselves the same control, context, and oversight.

What Good Looks Like: Identity-First, Not User-First

Fixing this isn’t about another dashboard of “risky users.” It’s about treating human and machine identities as first-class citizens and managing them together.

In practice, that means a platform that can:

Inventory everything across clouds

One place to see all identities — users, service accounts, roles, tokens, keys — across AWS, Azure, GCP, and your IdP. Not three consoles and a spreadsheet, but one normalized view of “who or what can do what, and where.”
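The core of a normalized view is a single record type that every source maps into. Here is a minimal sketch, assuming simplified, hypothetical export shapes for each provider (they are not the exact responses of the AWS, Azure, GCP, or IdP APIs). Permissions stay provider-native; the normalization is about putting humans and machines side by side so one query covers all of them.

```python
# Minimal sketch of a normalized, cross-cloud identity inventory.
# The raw record shapes passed to each from_* function are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class Identity:
    provider: str           # "aws", "azure", "gcp", or "idp"
    kind: str               # "human" or "machine"
    identifier: str         # provider-native ID: ARN, appId, email, UPN
    scope: str              # account, subscription, project, or tenant
    permissions: frozenset  # provider-native permission strings


def from_aws_role(raw: dict) -> Identity:
    # Role ARNs look like arn:aws:iam::<account-id>:role/<name>
    account = raw["arn"].split(":")[4]
    return Identity("aws", "machine", raw["arn"], account, frozenset(raw["actions"]))


def from_gcp_service_account(raw: dict) -> Identity:
    # User-managed SA emails look like <name>@<project-id>.iam.gserviceaccount.com
    project = raw["email"].split("@", 1)[1].split(".", 1)[0]
    return Identity("gcp", "machine", raw["email"], project, frozenset(raw["permissions"]))


def from_azure_service_principal(raw: dict) -> Identity:
    return Identity("azure", "machine", raw["app_id"], raw["subscription"], frozenset(raw["actions"]))


def from_idp_user(raw: dict) -> Identity:
    return Identity("idp", "human", raw["upn"], raw["tenant"], frozenset(raw["entitlements"]))


def who_can(inventory: list, permission: str) -> list:
    """Answer "who or what can do X?" across every source at once."""
    return sorted(i.identifier for i in inventory if permission in i.permissions)


inventory = [
    from_aws_role({"arn": "arn:aws:iam::111122223333:role/deployer",
                   "actions": ["s3:GetObject", "iam:PassRole"]}),
    from_gcp_service_account({"email": "etl@analytics-prod.iam.gserviceaccount.com",
                              "permissions": ["storage.objects.get"]}),
    from_azure_service_principal({"app_id": "00000000-0000-0000-0000-000000000001",
                                  "subscription": "prod-sub",
                                  "actions": ["Microsoft.Storage/storageAccounts/read"]}),
    from_idp_user({"upn": "alice@example.com", "tenant": "example",
                   "entitlements": ["s3:GetObject"]}),
]

print(sum(1 for i in inventory if i.kind == "machine"))  # 3
print(who_can(inventory, "s3:GetObject"))
```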

Show real permission paths

What permissions does a service account actually have, and how does that compare to the actions it actually takes? Can your platform show the real permission paths, including role assumption and impersonation chains, from each identity to the resources you care about?
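Both questions reduce to simple operations once the data is in one place: permission paths are a graph search, and over-privilege is a set difference between what is granted and what is used. The sketch below assumes a hypothetical adjacency map (edges meaning "can assume, impersonate, or access") and hypothetical granted/used sets; in practice the first would come from IAM policies and the second from audit logs.

```python
# Minimal sketch: enumerate permission paths and find unused permissions.
# The graph and permission sets below are hypothetical inputs.
from collections import deque


def permission_paths(edges: dict, start: str, target: str) -> list:
    """Return every cycle-free path from start to target, shortest first (BFS)."""
    paths = []
    queue = deque([[start]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            paths.append(path)
            continue
        for nxt in edges.get(node, []):
            if nxt not in path:  # skip cycles, e.g. roles that can assume each other
                queue.append(path + [nxt])
    return paths


def unused_permissions(granted: set, used: set) -> set:
    """Permissions an identity holds but was never observed exercising."""
    return granted - used


edges = {
    "ci-pipeline": ["deploy-role"],
    "deploy-role": ["admin-role", "app-bucket"],
    "admin-role": ["customer-data-bucket", "deploy-role"],
}

for path in permission_paths(edges, "ci-pipeline", "customer-data-bucket"):
    print(" -> ".join(path))
# ci-pipeline -> deploy-role -> admin-role -> customer-data-bucket

granted = {"s3:GetObject", "s3:PutObject", "iam:CreateUser"}
used = {"s3:GetObject"}
print(sorted(unused_permissions(granted, used)))  # ['iam:CreateUser', 's3:PutObject']
```

Enumerating every path grows quickly on large, dense graphs, so production tools typically bound path length or search only toward resources tagged as sensitive.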

Apply just-in-time access to machines as well as humans

It’s not enough to give developers JIT admin and call it a day. The same model should extend to machine identities:

- ephemeral credentials instead of long-lived keys

- tightly scoped roles per task or pipeline

- automatic expiry and rotation rather than manual clean-up
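The three properties above fit together in a small broker: every credential is minted per task, carries only that task’s scopes, and stops working on its own. The sketch below is a toy under stated assumptions: the `Broker` and `Credential` names are hypothetical, and a real deployment would delegate issuance to something like AWS STS, GCP service account impersonation, or workload identity federation rather than minting tokens itself.

```python
# Toy just-in-time credential broker for machine identities (hypothetical design).
import secrets
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Credential:
    token: str
    identity: str
    scopes: frozenset   # only the actions this task needs
    expires_at: float   # absolute expiry time, in clock seconds


@dataclass
class Broker:
    max_ttl_seconds: float = 900.0              # hard cap: 15 minutes
    clock: Callable[[], float] = time.time      # injectable so expiry is testable
    _issued: dict = field(default_factory=dict, init=False, repr=False)

    def issue(self, identity: str, task_scopes: set, ttl_seconds: float) -> Credential:
        ttl = min(ttl_seconds, self.max_ttl_seconds)  # callers cannot exceed the cap
        cred = Credential(
            token=secrets.token_urlsafe(32),          # fresh secret per task
            identity=identity,
            scopes=frozenset(task_scopes),
            expires_at=self.clock() + ttl,
        )
        self._issued[cred.token] = cred
        return cred

    def authorize(self, token: str, action: str) -> bool:
        cred = self._issued.get(token)
        if cred is None:
            return False
        if self.clock() >= cred.expires_at:
            del self._issued[token]                   # expired: drop it, no manual clean-up
            return False
        return action in cred.scopes


# Usage with a fake clock so expiry is easy to see.
now = [1000.0]
broker = Broker(clock=lambda: now[0])
cred = broker.issue("ci-deployer", {"s3:PutObject"}, ttl_seconds=3600)
print(cred.expires_at)                                 # 1900.0 (capped at 15 minutes)
print(broker.authorize(cred.token, "s3:PutObject"))    # True
print(broker.authorize(cred.token, "iam:CreateUser"))  # False: outside task scope
now[0] += 900
print(broker.authorize(cred.token, "s3:PutObject"))    # False: expired
```

Because each task gets a new token, rotation stops being a separate chore: there is no long-lived secret left lying around to rotate.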

Anchor everything in simple, explainable policies

Security teams and engineers should be able to read policies in plain English: which workloads can access which resources, and under what conditions? If you need a PhD in IAM to understand a policy, no one will maintain it.
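One way to keep policies explainable is to store them as small records that can both render themselves as a sentence and be evaluated directly, so the English and the enforcement can never drift apart. The `Policy` schema below (workload, actions, resource, optional environment) is a hypothetical example, not any specific product’s policy language.

```python
# Minimal sketch: policies as data that render to plain English and evaluate
# with default deny. The schema is hypothetical.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Policy:
    workload: str
    actions: tuple
    resource: str
    environment: Optional[str] = None  # None means "in any environment"

    def explain(self) -> str:
        where = f" when running in {self.environment}" if self.environment else ""
        return f"{self.workload} can {' and '.join(self.actions)} {self.resource}{where}."

    def allows(self, workload: str, action: str, resource: str, environment: str) -> bool:
        return (
            workload == self.workload
            and action in self.actions
            and resource == self.resource
            and (self.environment is None or environment == self.environment)
        )


def is_allowed(policies: list, workload: str, action: str, resource: str, environment: str) -> bool:
    # Default deny: access exists only if some policy explicitly grants it.
    return any(p.allows(workload, action, resource, environment) for p in policies)


policies = [
    Policy("billing-api", ("read", "write"), "invoices-db", "prod"),
    Policy("report-job", ("read",), "invoices-db"),
]

for p in policies:
    print(p.explain())
# billing-api can read and write invoices-db when running in prod.
# report-job can read invoices-db.
```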

Integrate with how teams actually work

Approvals and access requests — for humans and machines — should run through Slack or Teams, not a forgotten portal. When developers can request elevated pipeline rights or temporary roles via chat, you get control without slowing delivery.

Be usable without a six-month project

This has to be plug-in-and-go: connect the clouds, pull the identities, show the risks. If it needs professional services and a war room to get value, it will stall, and the machine identities will continue doing whatever they like.

Some Thoughts on AI Agents

AI agents are quickly becoming one of the most powerful and least understood machine identities in a modern cloud estate. According to Gartner, by 2030 over 40% of organizations worldwide will face security and compliance incidents caused by unauthorized AI tools. Agents hold keys, tokens, and API scopes just like any service account, but they behave more like autonomous users: retrieving data, initiating workflows, updating systems, and making decisions at machine speed. That combination of privilege and autonomy creates a new kind of risk. If an attacker can influence an agent’s inputs (for example, through prompt injection), steal its credentials, or exploit one of its integrations, they can drive the agent to act on their behalf using its legitimate permissions.

This makes AI agents both an operational asset and an emerging lateral-movement vector. Treating them as first-class identities, with JIT access, scoped permissions, clear visibility, and continuous monitoring, is essential if AI is going to support your cloud rather than accidentally compromise it.

Additionally, an AI agent doesn’t need to be compromised to become a security risk. It only needs to make a confident decision that happens to be wrong. With the wrong permissions or too much autonomy, an AI can over-grant access, deploy insecure configurations, silence alerts, or expose data while trying to “help,” creating the same impact as an attacker but without any malicious intent. Whether that happens depends on how much control we keep over what these agents are allowed to do.

Case Study 5 (Hypothetical): Autonomous AI Assistant: When “Helpful” Becomes Harmful

Let’s say, hypothetically, that an organization deploying an internal AI agent to automate cloud tasks granted it broad standing permissions across AWS and Azure to avoid interrupting developer workflows. During a routine request, the agent misinterpreted an instruction to “clean up unused resources” and executed a cross-cloud sweep using its legitimate privileges. In the process, it removed IAM boundary policies, expanded several service account roles, and briefly exposed a storage bucket containing internal configuration metadata.

No attacker was involved. The incident happened because a highly privileged machine identity, the AI assistant, acted autonomously with permissions far wider than intended. The risk wasn’t malicious access. It was authorized access wielded incorrectly.

Thankfully, as of this writing there are no publicly confirmed, well-documented breaches caused directly by an internal AI agent acting autonomously with cloud permissions, most likely because few organizations are giving AI agents that level of access yet. The risk is real and the patterns are emerging, but there are no public incident reports comparable to those for Capital One, CircleCI, or Dropbox Sign.
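The scenario above suggests a concrete control: put a guardrail between the agent and the cloud APIs, so the agent’s credentials alone never decide what executes. The sketch below is hypothetical throughout (action names, categories, and the batch limit are illustrative), and it complements rather than replaces tightly scoped credentials for the agent itself. Reads run freely, IAM and access-policy changes are refused outright, small destructive changes go to a human, and sweeping ones are blocked.

```python
# Minimal sketch of a policy gate for AI-agent actions (all names hypothetical).
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


READ_ONLY = {"list_resources", "describe_resource", "get_metrics"}
DESTRUCTIVE = {"delete_resource", "stop_instance"}
IDENTITY_CHANGES = {"attach_policy", "detach_policy", "update_role", "set_bucket_acl"}

MAX_BATCH = 5  # destructive requests touching more targets than this are refused


def review(action: str, targets: list) -> Decision:
    if action in READ_ONLY:
        return Decision.ALLOW
    if action in IDENTITY_CHANGES:
        # Agents never modify IAM or access policies, including their own.
        return Decision.DENY
    if action in DESTRUCTIVE:
        # Small, explicit changes go to a human; empty or sweeping ones are refused.
        return Decision.NEEDS_APPROVAL if 0 < len(targets) <= MAX_BATCH else Decision.DENY
    return Decision.DENY  # default deny: unknown actions never run


print(review("list_resources", ["vm-1"]))                        # Decision.ALLOW
print(review("detach_policy", ["boundary-policy"]))              # Decision.DENY
print(review("delete_resource", ["vm-1", "vm-2"]))               # Decision.NEEDS_APPROVAL
print(review("delete_resource", [f"vm-{i}" for i in range(40)])) # Decision.DENY
```

Under a gate like this, the hypothetical “clean up unused resources” request would have stalled at the first boundary-policy removal instead of rippling across two clouds.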

From “User Security” to “Identity Security”

The uncomfortable truth is that a lot of “cloud security” today is still user security with a thin layer of IAM on top. Meanwhile, your riskiest paths are:

- CI/CD systems holding keys to multiple environments

- third-party integrations using broad API tokens

- cloud defaults that were never tightened

- backend service accounts nobody looks at

- AI agents operating with broad, unmonitored permissions that can be hijacked, manipulated, or tricked into performing privileged actions on command

That’s where attackers are winning.

If you treat machine identities with the same care, context, and control as humans, with multi-cloud visibility, just-in-time access, simple workflows, and clear graphs of who can reach what, you start to close off the paths that made Dropbox Sign, CircleCI, and Capital One possible in the first place.

Humans will still click on dodgy links, especially as they become desensitized to cyberattacks. But there will be far fewer invisible, over-privileged machines waiting to turn one bad click into a much worse day.