Put AI agents on a short leash, and give them receipts

Your developers have hired a new colleague, and this one sits outside the usual joiner–mover–leaver HR lifecycle.

They don’t sleep. They don’t take lunch. They can open pull requests, change Terraform, query production logs, and “helpfully” fix things in your cloud at 3:13 a.m.

They’re also extremely easy to trick.

That’s the difference between GenAI and agentic AI: chatbots suggest; agents act. Which means your biggest risk stops being “bad answers” and becomes bad actions, performed at machine speed with whatever permissions you accidentally left lying around. Viewed objectively, as a user, that’s kinda terrifying.

And yes, this is already happening. Stack Overflow’s 2025 survey found 84% of respondents are using or planning to use AI tools in development, and 51% of professional developers use them daily. JetBrains’ 2025 report puts regular AI use in dev at 85%, with 62% relying on at least one AI coding assistant/agent/editor.

So, no: you can’t “ban agents” and call it governance. That’s going to stifle innovation and throttle competitiveness. You need to make them safe without turning delivery into some complicated ticketing drinking game.

Why Agentic AI Breaks Usual Security Assumptions

Security teams are used to two tidy categories:

- Humans (messy, slow, occasionally brilliant).

- Machines (predictable, scripted, and hopefully least-privileged).

Agents are neither. They’re goal-seeking workflows that read inputs, decide steps, and call tools. That creates three uncomfortable realities:

1) Prompt injection becomes a permissions problem

If an agent reads untrusted content (issues, docs, emails, web pages) and has tool access, attackers don’t need an exploit. They need a sentence.

The recent take from our good friend and Trustle advisor Bruce Schneier is blunt: vendors can block specific prompt injection tricks as they’re discovered, but universal prevention is not achievable with today’s LLMs. In other words: if you’re betting your cloud on “the model won’t fall for it,” I’ve a bridge in London to sell you.

2) “Tools” are the new attack surface

OWASP calls out that agentic systems introduce distinct failure modes like tool misuse/exploitation and prompt injection, and need dedicated mitigations.

This isn’t theoretical. Recent reporting highlighted research into serious vulnerabilities across AI-assisted dev tools and IDE workflows (“IDEsaster”), enabling things like data theft and even remote code execution in some setups, often via the way agents interact with “normal” IDE capabilities.

3) The biggest risk is boring: standing privilege

Agents thrive on convenience. Convenience loves permanent access. Permanent access loves being breached.

In cloud, this shows up as:

- Over-privileged roles “just for now”

- Long-lived API keys in CI/CD

- Shared service accounts

- Orphaned identities no one dares delete

- OAuth apps and tokens that quietly accumulate power

If we give an agent admin once, we’ve basically made it a permanent internal contractor with superpowers and no HR file.

The Goal: Keep Innovation Fast, Make Access Temporary

Here’s the mindset shift that actually works:

Treat agents like privileged identities.

Then make privileged access time-bound, scoped, and provable.

This is where modern cloud identity security earns its keep. You want a platform that can:

- discover and normalize entitlements across AWS/Azure/GCP,

- identify over-privilege and risky access paths,

- broker just-in-time elevation with tight scopes,

- automatically revoke access on expiry,

- and produce audit-grade evidence of “who/what accessed what, when, and why.”

Practically, that looks like the “time-boxed, auto-revoked privilege” pattern (especially useful when an agent needs elevated access for 10 minutes to complete a bounded job).
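To make the pattern concrete, here is a minimal in-memory sketch of a time-boxed grant. Everything in it (`Grant`, `GrantStore`, the agent ID, the scope string) is a hypothetical name for illustration, not any vendor's API; a real broker would attach and detach cloud roles rather than keep a Python list.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    """Hypothetical time-boxed grant: one identity, one scope, one expiry."""
    agent_id: str
    scope: str          # illustrative label, e.g. "prod-logs:read"
    reason: str
    expires_at: datetime

    def is_active(self, now: datetime) -> bool:
        return now < self.expires_at


class GrantStore:
    """In-memory stand-in for whatever brokers access in your environment."""

    def __init__(self) -> None:
        self._grants: list[Grant] = []

    def issue(self, agent_id: str, scope: str, reason: str,
              minutes: int, now: datetime) -> Grant:
        grant = Grant(agent_id, scope, reason, now + timedelta(minutes=minutes))
        self._grants.append(grant)
        return grant

    def is_allowed(self, agent_id: str, scope: str, now: datetime) -> bool:
        # An expired grant never authorizes anything, even before it is swept.
        return any(
            g.agent_id == agent_id and g.scope == scope and g.is_active(now)
            for g in self._grants
        )

    def sweep(self, now: datetime) -> list[Grant]:
        """Drop expired grants and return them so the revocation can be logged."""
        expired = [g for g in self._grants if not g.is_active(now)]
        self._grants = [g for g in self._grants if g.is_active(now)]
        return expired


store = GrantStore()
t0 = datetime(2025, 1, 1, 3, 13, tzinfo=timezone.utc)
store.issue("agent-ci-42", "prod-logs:read", "investigate failed deploy",
            minutes=10, now=t0)
print(store.is_allowed("agent-ci-42", "prod-logs:read", t0 + timedelta(minutes=5)))   # True
print(store.is_allowed("agent-ci-42", "prod-logs:read", t0 + timedelta(minutes=11)))  # False
```

The design choice worth copying is that the expiry check sits in the authorization path itself, so a late or failed cleanup job can't silently extend access.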

A Pragmatic Control Set

You don’t need a 40-page “Agent Policy.” You need a handful of controls that map to reality.

1) Make agents first-class identities

- Separate identities per agent/workflow (no shared “ai-bot-admin” monstrosities).

- Strong authentication and clean ownership (someone is accountable when it goes weird).

- Environment separation (dev agents don’t casually wander into prod).

2) Zero standing privilege, by default

This is the keystone. With zero standing privilege (ZSP), agents hold no permanent elevated access, so you keep teams fast and reduce blast radius: elevation is the exception, and it evaporates automatically.

3) Just-in-time access with policy-based approvals

Not “security theater approvals.” Real, low-friction approvals that happen where teams already work (Slack or Teams), so the dev experience doesn’t implode.

This also fixes the perennial problem of missing context. In-channel approvals can include the resource, scope, duration, request reason, and who approved it, creating squeaky-clean access trails without having to beg people to fill out tickets.
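As a rough sketch of what “policy-based” can mean here, the snippet below routes a request to auto-approval, a human, or denial, and renders the context an approver would see in chat. The field names, the 60-minute ceiling, and the rule that only read access in dev is auto-approved are all assumptions made for illustration; your own policy will differ.

```python
from dataclasses import dataclass
from typing import Optional

MAX_MINUTES = 60  # assumed ceiling for any single elevation


@dataclass
class AccessRequest:
    agent_id: str
    resource: str
    scope: str            # "read" or "write" in this sketch
    environment: str      # "dev" or "prod"
    duration_minutes: int
    reason: str
    approved_by: Optional[str] = None  # filled in once a human approves


def route(request: AccessRequest) -> str:
    """Return "deny", "auto-approve", or "needs-human" for a request."""
    # No stated reason or an over-long window is rejected outright.
    if not request.reason.strip() or request.duration_minutes > MAX_MINUTES:
        return "deny"
    # Low-risk requests skip the human so approvals stay low-friction.
    if request.environment == "dev" and request.scope == "read":
        return "auto-approve"
    return "needs-human"


def approval_message(request: AccessRequest) -> str:
    """Render the context an approver would see in the chat channel."""
    return (
        f"{request.agent_id} requests {request.scope} on {request.resource} "
        f"({request.environment}) for {request.duration_minutes} min. "
        f"Reason: {request.reason}"
    )


request = AccessRequest("agent-ci-42", "prod-logs", "read", "prod", 10,
                        "investigate failed deploy")
print(route(request))             # needs-human
print(approval_message(request))
```

Because the request object already carries resource, scope, duration, and reason, the approver's name is the only thing left to record once someone clicks approve.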

4) Tool allowlists + step-up for dangerous actions

Agents should have an allowlist of tools/actions. Anything destructive (IAM changes, key rotation, prod data ops, and so on) should trigger step-up controls: human approval, break-glass, or both.

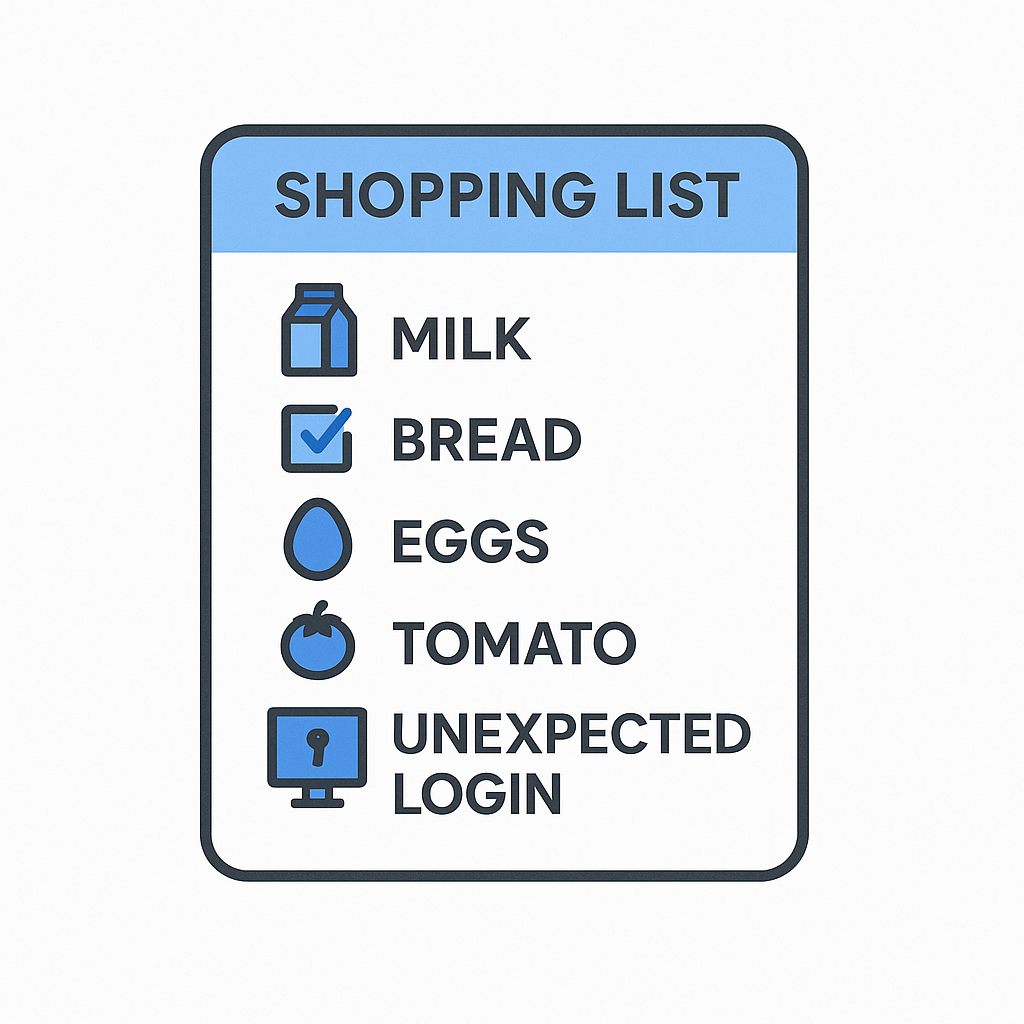
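A tool allowlist with step-up can be sketched as a single check that runs before any tool call reaches the tool. The tool names and the `authorize_tool_call` function below are hypothetical, and break-glass handling is left out to keep the example short.

```python
from typing import Optional

# Assumed tool names, for illustration only.
ALLOWED_TOOLS = frozenset(
    {"read_logs", "open_pull_request", "rotate_key", "update_iam_policy"}
)
# Destructive subset that needs a named human approver.
STEP_UP_TOOLS = frozenset({"rotate_key", "update_iam_policy"})


class ToolCallDenied(Exception):
    """Raised when a tool call is blocked before it reaches the tool."""


def authorize_tool_call(agent_id: str, tool: str,
                        approved_by: Optional[str] = None) -> None:
    """Return silently if the call may proceed, otherwise raise ToolCallDenied."""
    if tool not in ALLOWED_TOOLS:
        raise ToolCallDenied(f"{agent_id}: '{tool}' is not on the allowlist")
    if tool in STEP_UP_TOOLS and not approved_by:
        raise ToolCallDenied(f"{agent_id}: '{tool}' needs human approval first")


authorize_tool_call("agent-ci-42", "read_logs")                        # proceeds
authorize_tool_call("agent-ci-42", "rotate_key", approved_by="alice")  # proceeds

try:
    authorize_tool_call("agent-ci-42", "delete_bucket")
except ToolCallDenied as err:
    print(err)  # agent-ci-42: 'delete_bucket' is not on the allowlist
```

Note the default: a tool the agent was never explicitly given is denied, so a prompt-injected request for something new fails closed.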

5) “Access receipts” as a design requirement

If you can’t answer “what did the agent do?” in minutes, you don’t have agentic AI; you have an incident generator.

This is why enterprises keep stalling at the pilot stage. A Dynatrace study reported that about half of agentic AI projects are stuck in pilot/POC, with 52% citing security/privacy/compliance as a top barrier. Observability and traceability aren’t nice-to-haves; they’re the admission ticket.
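One way to make a receipt trustworthy, sketched here under assumptions, is to chain each entry to the hash of the previous one so that edits after the fact are detectable. The field names are illustrative, and hash chaining is just one technique among several; a production system would also need durable, access-controlled storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first receipt in a chain


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_receipt(log: list, entry: dict) -> dict:
    """Append an entry linked to the previous receipt's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {**entry, "prev_hash": prev_hash}
    receipt = {**body, "hash": _digest(body)}
    log.append(receipt)
    return receipt


def verify(log: list) -> bool:
    """Recompute every hash and link; False means something was altered."""
    prev_hash = GENESIS
    for receipt in log:
        body = {k: v for k, v in receipt.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != receipt["hash"]:
            return False
        prev_hash = receipt["hash"]
    return True


log: list = []
append_receipt(log, {"agent_id": "agent-ci-42", "action": "read_logs",
                     "resource": "prod-logs", "at": "2025-01-01T03:13:00Z",
                     "reason": "investigate failed deploy", "approved_by": "alice"})
append_receipt(log, {"agent_id": "agent-ci-42", "action": "grant_revoked",
                     "resource": "prod-logs", "at": "2025-01-01T03:23:00Z",
                     "reason": "expiry", "approved_by": None})
print(verify(log))               # True
log[0]["approved_by"] = "mallory"
print(verify(log))               # False
```

Each receipt answers who, what, when, and why in one record, which is what lets you reconstruct an agent's actions in minutes rather than days.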

Governance That Helps, Not Hinders

If you need “framework gravity” for internal alignment, you’ve got options:

- NIST AI RMF 1.0 for lifecycle risk thinking (Govern, Map, Measure, Manage).

- ISO/IEC 42001 if your organization wants an AI management system standard that looks familiar to ISO 27001 people.

- OWASP’s agentic guidance for practical, build-level mitigations.

But don’t confuse governance with controls. Policies don’t stop agentic blast radius. Access design does.

At The Speed of Innovation

Agentic AI will speed up development. It will, alas, also speed up mistakes.

The SOC’s job isn’t to slow innovation. It’s to make sure your agents can’t quietly accumulate power, wander off-script, and do something “helpful” that costs you a quarter’s worth of sleep.

Give agents temporary, scoped access. Route approvals through the workflow. Revoke automatically. Log everything. Ship faster and sleep better.

And if anyone complains this is “too much process,” remind them: it’s not process, it’s just keeping the robots on a tight rein. With receipts.