NVIDIA just published a warning. Identity teams should pay attention...

NVIDIA’s new Agentic AI Security Framework arrived this week with the energy of someone tapping us on the shoulder and whispering, “You might want to sit down for this.” Their research outlines, in suitable technical prose, the many ways autonomous AI systems can be misled, manipulated, compromised, or simply wander off into unsafe territory under their own steam.

It’s not theoretical. And it’s not niche. It reinforces a growing reality: AI agents are behaving like actual users inside our systems, complete with access, credentials, and the capacity to cause both delight and carnage.

This is yet another timely reminder that identity is always where the interesting problems gather. The cloud turned machines into users. AI is now making those users autonomous: agents quietly call APIs and perform tasks with a confidence and frequency rarely matched by humans or change-control boards.

What NVIDIA’s Framework Is Really Saying

Agentic AI isn’t your standard “chatbot that sometimes overshares.” It’s a system that plans tasks, selects tools, executes workflows, and adapts based on context. As soon as something can act, rather than merely generate text, it becomes a security principal.

NVIDIA specifically highlights several classes of risk:

- Prompt and memory poisoning: Feed the agent bad information, and it will make bad decisions, with admirable enthusiasm.

- Tool misuse: It uses the right tool… in the wrong place. Picture a shell-script-happy intern who never learned fear.

- Permission overreach: The agent has more access than it needs, or keeps access longer than it should.

- Data leakage: Sensitive information rattles out through unintended channels.

- Multi-agent confusion or collusion: Yes, the agents can trick each other. Because of course they can.

The underlying message isn’t subtle: if you test only prompts and outputs, you’re missing the point. The real danger sits in the workflows, where decisions meet permissions.

AI Agents = A New Tier of Identity

For years, we’ve warned that non-human identities were the silent growth vector in cloud estates. Service accounts, CI/CD bots, ephemeral jobs, and OAuth grants, all multiplying like veritable Gremlins left unsupervised near a water source.

Agentic AI is simply the next evolution. But with personality.

Each agent has:

- credentials

- scopes

- behavioral patterns

- access workflows

- a tendency to accumulate permissions like lost socks behind a radiator

In practice, these systems behave like users, but with fewer inhibitions and more persistence. And once attackers realize they can steer these agents, by poisoning context or hijacking credentials, the attack surface widens in uncomfortable ways.

This is why AI safety cannot exist without identity safety. And why identity tools are about to become the unofficial AI safety framework enterprises actually deploy.

The Identity Controls That Make Agentic AI (Somewhat) Safe

AI researchers can talk forever about sandboxing, guardrails, and runtime behavioral monitoring. All useful. All complex. All still maturing.

But in the real world, inside real organizations, the practical safety line starts with identity governance. If an agent can only access what it needs, only when it needs it, and only under traceable conditions, the blast radius shrinks dramatically.

Here are the controls that map cleanly to NVIDIA’s risk taxonomy:

1. Least Privilege for Synthetic Workers

AI agents should never hold standing access. Credentials should be narrowly scoped. Entitlements should be minimal. Every permission should be intentional. That way, if an agent is tricked, compromised, or simply makes an ill-timed decision at 3 a.m., the damage is contained.
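As a minimal sketch of what “every permission should be intentional” can look like in code, here is a deny-by-default check for an agent identity. The `AgentIdentity` class, the `is_allowed` helper, and scope strings such as `"tickets:read"` are hypothetical illustrations, not any particular vendor’s API; in practice this logic usually lives in your IAM or policy engine.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Hypothetical example: class, helper, and scope names are illustrative only.
@dataclass(frozen=True)
class AgentIdentity:
    """Agent principal with an explicit, minimal set of scopes."""
    name: str
    scopes: FrozenSet[str]

def is_allowed(agent: AgentIdentity, action: str) -> bool:
    # Deny by default: an action is permitted only if that exact scope was granted.
    # No wildcards or inheritance, so access never expands implicitly.
    return action in agent.scopes

# Illustrative agent that only needs to read and label support tickets.
triage_bot = AgentIdentity("ticket-triage", frozenset({"tickets:read", "tickets:label"}))

print(is_allowed(triage_bot, "tickets:read"))    # True
print(is_allowed(triage_bot, "tickets:delete"))  # False
```

Because the check fails closed, a triage agent tricked into attempting `tickets:delete` is simply refused. Avoiding wildcard scopes is the design choice that keeps the blast radius small.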

2. Just-In-Time Access, Not Permanent Keys

Time-boxed elevation is becoming the default for humans. It should also be the default for machines, and especially for autonomous agents. An agent should request access when it needs it and lose that access automatically when the task or time window ends. It should never carry dormant privileges that become next month’s compromise.
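The request-and-expire cycle described above can be sketched in a few lines of Python. This is an illustration under stated assumptions: `Grant` and `request_access` are hypothetical names, the 15-minute default lifetime is an arbitrary choice, and a real system would run an approval policy at the point where this sketch simply approves.

```python
import time
from dataclasses import dataclass
from typing import Optional

# Hypothetical sketch: names and the default TTL are illustrative assumptions.
@dataclass(frozen=True)
class Grant:
    """Time-boxed grant: one agent, one scope, one expiry."""
    agent: str
    scope: str
    expires_at: float  # Unix timestamp in seconds

    def is_active(self, now: Optional[float] = None) -> bool:
        # Expiry is enforced at check time, so no cleanup job has to
        # remember to remove the permission later.
        current = time.time() if now is None else now
        return current < self.expires_at

def request_access(agent: str, scope: str, ttl_seconds: int = 900) -> Grant:
    # Placeholder for an approval step (policy check, human sign-off, etc.).
    # This sketch approves every request, but only for ttl_seconds.
    if ttl_seconds <= 0:
        raise ValueError("ttl_seconds must be positive")
    return Grant(agent, scope, time.time() + ttl_seconds)

grant = request_access("report-agent", "warehouse:read", ttl_seconds=600)
print(grant.is_active())  # True until ten minutes have passed
```

The agent would call `grant.is_active()` before each action; once the window closes it returns `False`, with nobody needing to remember to revoke anything.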

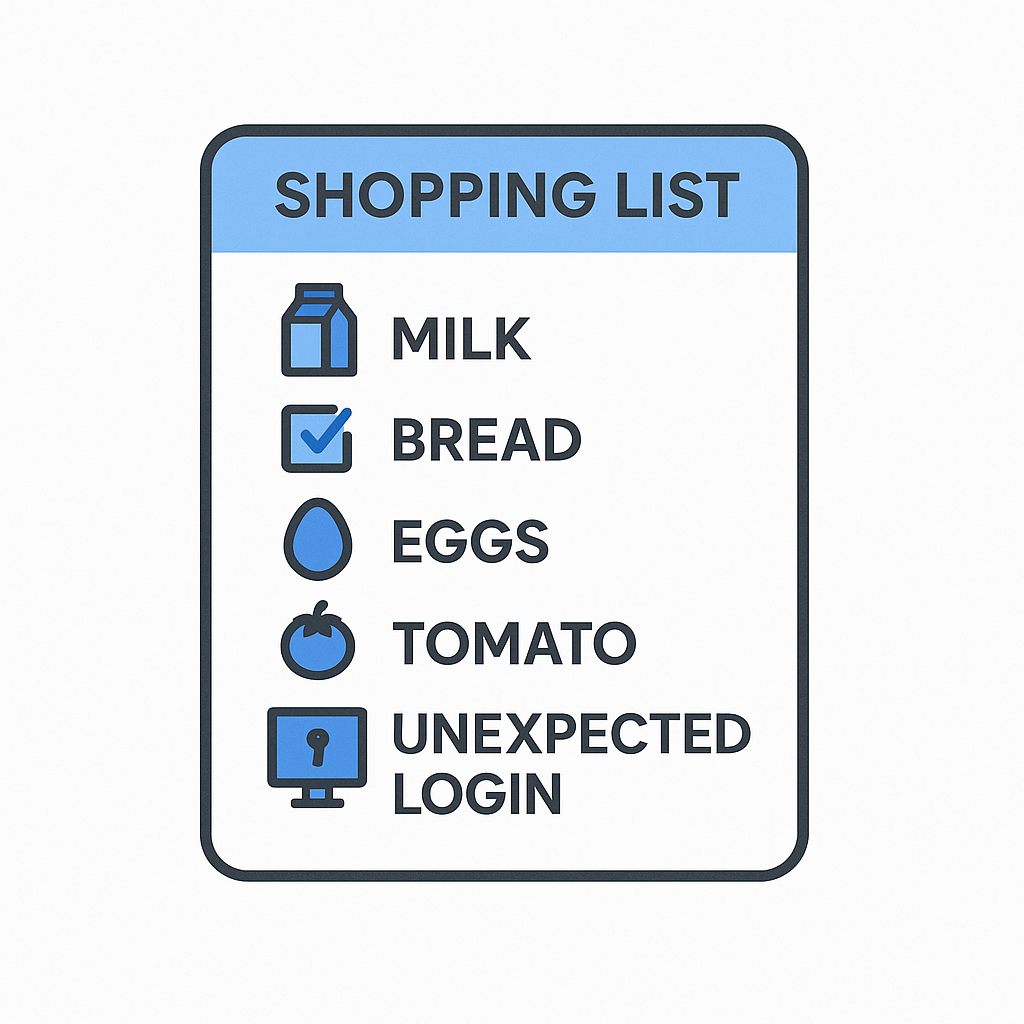

3. Full Visibility Into Who (or What) Has Access

Inventory is the foundation. If you can’t see all your identities (human, machine, synthetic, accidental), you can’t govern them. Agentic AI will accelerate the sprawl. The only sustainable defence is centralized visibility and a standardized access security policy.
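To illustrate what one standardized policy over one centralized inventory might mean, here is a hypothetical sketch: every identity, whatever its kind, goes into the same list and is checked against the same rules. The `IdentityRecord` fields and the two example rules (no accountable owner; a non-human identity holding standing access) are assumptions chosen for illustration, not a complete policy.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical inventory row, imagined as aggregated from an IdP, cloud IAM, and agent platforms.
@dataclass
class IdentityRecord:
    name: str
    kind: str              # "human", "machine", or "agent"
    owner: Optional[str]   # accountable person or team; None if unknown
    standing_access: bool  # True if any permission never expires

def needs_review(inventory: List[IdentityRecord]) -> List[str]:
    # One policy for every identity, regardless of where it came from.
    flagged = []
    for rec in inventory:
        # Rule 1 (illustrative): every identity needs an accountable owner.
        orphaned = rec.owner is None
        # Rule 2 (illustrative): non-human identities should not hold standing access.
        risky_standing = rec.kind != "human" and rec.standing_access
        if orphaned or risky_standing:
            flagged.append(rec.name)
    return flagged

inventory = [
    IdentityRecord("alice", "human", "it-ops", True),
    IdentityRecord("ci-deploy", "machine", None, True),
    IdentityRecord("summarizer-agent", "agent", "data-team", True),
    IdentityRecord("triage-agent", "agent", "support", False),
]
print(needs_review(inventory))  # ['ci-deploy', 'summarizer-agent']
```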

4. Audit Trails That Don’t Rely on Human Memory

When a human makes a mistake, you can at least ask them why. When an AI agent misfires, it does so with the serene confidence of a cat knocking a glass of water off a table.

- You need logs.

- You need timestamps.

- You need a forensic breadcrumb trail that explains exactly which action was taken, why, and under which temporary permissions.
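One way to produce that breadcrumb trail is to emit one structured record per agent action, capturing what was done, why, and under which temporary grant. The sketch below assumes JSON Lines output and uses hypothetical field names (`agent`, `action`, `reason`, `grant_id`); map them to whatever your logging or SIEM pipeline expects.

```python
import json
from datetime import datetime, timezone

def audit_record(agent: str, action: str, reason: str, grant_id: str) -> str:
    """Return one audit entry as a JSON Lines string (field names are illustrative)."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),  # when, in UTC
        "agent": agent,        # which identity acted
        "action": action,      # exactly what it did
        "reason": reason,      # why: the task or plan step that triggered it
        "grant_id": grant_id,  # under which temporary permission
    }
    return json.dumps(record, sort_keys=True)

line = audit_record("report-agent", "SELECT on orders", "weekly revenue summary", "grant-0042")
print(line)
```

Shipping each line to a write-once store, rather than a file the agent itself can modify, helps keep the trail trustworthy when you need it for forensics.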

5. Fast, Automatic Revocation

AI doesn’t take lunch breaks. It doesn’t step away from the keyboard to grab a coffee. If it starts behaving strangely or dangerously, the ability to revoke access instantly is the difference between “minor incident” and “board meeting.”
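Instant revocation only works if permissions are checked against live state on every action, rather than baked into a long-lived token the agent already holds. The hypothetical `GrantStore` below is an in-memory sketch of that idea with a single `revoke_all` kill switch; a production version would sit in your identity provider or policy service, and tokens already issued would still need short lifetimes or a revocation check to be cut off.

```python
import threading

class GrantStore:
    """Hypothetical in-memory store of active grants, keyed by agent name."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._grants: dict[str, set[str]] = {}

    def grant(self, agent: str, scope: str) -> None:
        with self._lock:
            self._grants.setdefault(agent, set()).add(scope)

    def check(self, agent: str, scope: str) -> bool:
        # Every action is checked at call time, so a revocation takes effect
        # on the agent's very next request rather than at session end.
        with self._lock:
            return scope in self._grants.get(agent, set())

    def revoke_all(self, agent: str) -> int:
        # Kill switch: drop every grant the agent holds in one step and
        # report how many were removed.
        with self._lock:
            return len(self._grants.pop(agent, set()))

store = GrantStore()
store.grant("report-agent", "warehouse:read")
store.grant("report-agent", "reports:write")
print(store.revoke_all("report-agent"))               # 2
print(store.check("report-agent", "warehouse:read"))  # False
```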

Identity: The AI Safety Layer Enterprises Will Actually Deploy

Here’s the pragmatic truth: most organizations will not run advanced LLM sandboxes, adversarial agent simulations, or specialized behavioral monitoring platforms in 2026.

They will invest in identity governance and machine identity management.

Because identity is the lever they already understand.

It’s where audits happen.

It’s where risk is measured.

It’s where privilege and access creep are already fought daily.

Agentic AI introduces a new class of identity, but not a new class of governance. The principles are the same:

- minimize trust

- minimize duration

- minimize ambiguity

- maximize traceability

The tooling is already here. The mindset already exists. Now it just applies to a new type of “user,” one that never sleeps, never gets bored, and occasionally writes bad poetry when you wish it wouldn’t.