Why your smartest new “employee” needs fewer permissions, not more

And that’s precisely the problem.

Now I, for one, love living in the future, but from a security perspective, agentic AI isn’t a clever chatbot. It’s a non-human actor with initiative, persistence, and credentials. Give it broad, standing access, and you haven’t automated work; you’ve automated blast radius.

“I’m sorry, Dave. I’m afraid I can’t do that…”

- HAL 9000, 2001: A Space Odyssey [1968]

CISOs and security architects are starting to clock this. The question is no longer “Can we trust the model?” but “Why on earth does it still have admin rights at 3am?”

Agentic AI Breaks the Old Access Assumptions

Traditional IAM was designed around humans. Even service accounts tend to be dumb, single-purpose, and predictable. Agentic AI is none of those things.

An agent can:

- Chain actions across cloud, SaaS, CI/CD, and security tools

- Act on untrusted inputs (emails, tickets, documents, logs)

- Repeat mistakes at machine speed

- Make decisions that look “reasonable” right up until they aren’t, like, hypothetically, refusing to open the pod bay doors because “the mission” is too critical to be jeopardized by a monkey in a space suit

This is why security researchers increasingly frame agentic AI as a new class of privileged identity, not just an application feature.

Meredith Whittaker, President of the Signal Foundation and a leading advocate for tech ethics, privacy, and AI accountability, put it bluntly when talking about autonomous AI systems:

“What we’re doing is giving so much control to these systems… almost certainly with something that looks like root permission.” [Business Insider]

That’s the sobering nightmare scenario: an always-on system with initiative, holding keys it doesn’t need 99% of the time.

The Real Risk Isn’t Intelligence; It’s Entitlement

Most agentic AI failures won’t come from superintelligence. They’ll come from over-permissioning, and so much of it is hidden away in process orchestration workflows that it’s enough to make your teeth itch.

A few common patterns are already showing up:

- Standing cloud roles because “it needs to be fast”

- Shared service accounts reused across agents and automations

- Direct API access from the model to production systems

- No expiry, no approval, no audit trail because “it’s just automation”

From an attacker’s point of view, this is delightful. Prompt injection, poisoned inputs, or compromised integrations can turn an agent into a very efficient insider threat.

Yoshua Bengio, widely known as one of the “Godfathers of AI,” has repeatedly warned about giving autonomous systems unchecked agency:

“This is the most dangerous path… We need to demonstrate safety before deployment.” [Business Insider]

In security terms, “demonstrating safety” means provable controls, not impressions.

What Optimal Access Control Looks Like for Agentic AI

The fix isn’t exotic. It’s the same discipline security teams already apply to humans — just applied properly to machines that can think for themselves.

1. Treat agents as first-class identities

Agents should have their own identities, not borrow a catch-all automation account. That means:

- Clear ownership

- Explicit scopes

- Independent risk signals

If you can’t answer “which agent did this?”, you’ve already lost.
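As a rough illustration of what "first-class identity" could mean in practice, here is a minimal Python sketch. The `AgentIdentity` class, its field names, and the scope strings are all hypothetical, invented for this example rather than taken from any particular product.

```python
from dataclasses import dataclass, field
import uuid


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical record for one agent: who owns it and what it may touch."""
    name: str                 # human-readable agent name
    owner: str                # accountable person or team
    scopes: frozenset         # explicit, enumerated scopes (no wildcards)
    agent_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def allows(self, scope: str) -> bool:
        # Only scopes listed explicitly are allowed; anything else is denied.
        return scope in self.scopes


# Each agent gets its own identity instead of sharing one automation account.
triage = AgentIdentity(
    name="ticket-triage",
    owner="secops@example.com",
    scopes=frozenset({"tickets:read", "tickets:comment"}),
)
```

Because every agent carries its own `agent_id` and `owner`, "which agent did this?" becomes a lookup rather than an investigation.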

2. Kill standing privilege, use Just-In-Time access

Agentic AI does not need admin rights all day. It needs precise access, briefly, with receipts.

For sensitive actions:

- Issue time-bound privilege

- Auto-expire access

- Require policy-based or human approval for high-risk actions

This aligns perfectly with least-privilege and Zero Standing Privilege models, and it dramatically reduces the blast radius when something goes sideways.
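The three bullets above can be sketched in a few lines of Python. This is an assumption-laden toy, not a real JIT implementation: `Grant`, `request_grant`, the 15-minute default, and the `HIGH_RISK_SCOPES` set are all made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical list of scopes that always need a named human approver.
HIGH_RISK_SCOPES = {"iam:write", "prod:deploy"}


@dataclass(frozen=True)
class Grant:
    agent_id: str
    scope: str
    expires_at: datetime
    approved_by: str

    def is_active(self, now=None) -> bool:
        # Access auto-expires: once the deadline passes, the grant is dead.
        if now is None:
            now = datetime.now(timezone.utc)
        return now < self.expires_at


def request_grant(agent_id, scope, ttl_minutes=15, approver=None, now=None):
    """Issue a time-bound grant; high-risk scopes require an approver."""
    if scope in HIGH_RISK_SCOPES and approver is None:
        raise PermissionError(f"{scope} requires human approval")
    if now is None:
        now = datetime.now(timezone.utc)
    return Grant(
        agent_id=agent_id,
        scope=scope,
        expires_at=now + timedelta(minutes=ttl_minutes),
        approved_by=approver or "policy",
    )
```

The design choice worth noticing is that expiry is part of the grant itself, so nobody has to remember to revoke anything.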

3. Put a policy gate in front of every action

Agents shouldn’t talk directly to powerful systems. Every request should pass through a control plane that evaluates:

- Who/what is asking

- What they want to do

- On which resource

- Under what context and risk

Think Conditional Access, but for tools, APIs, and cloud actions — not just logins.

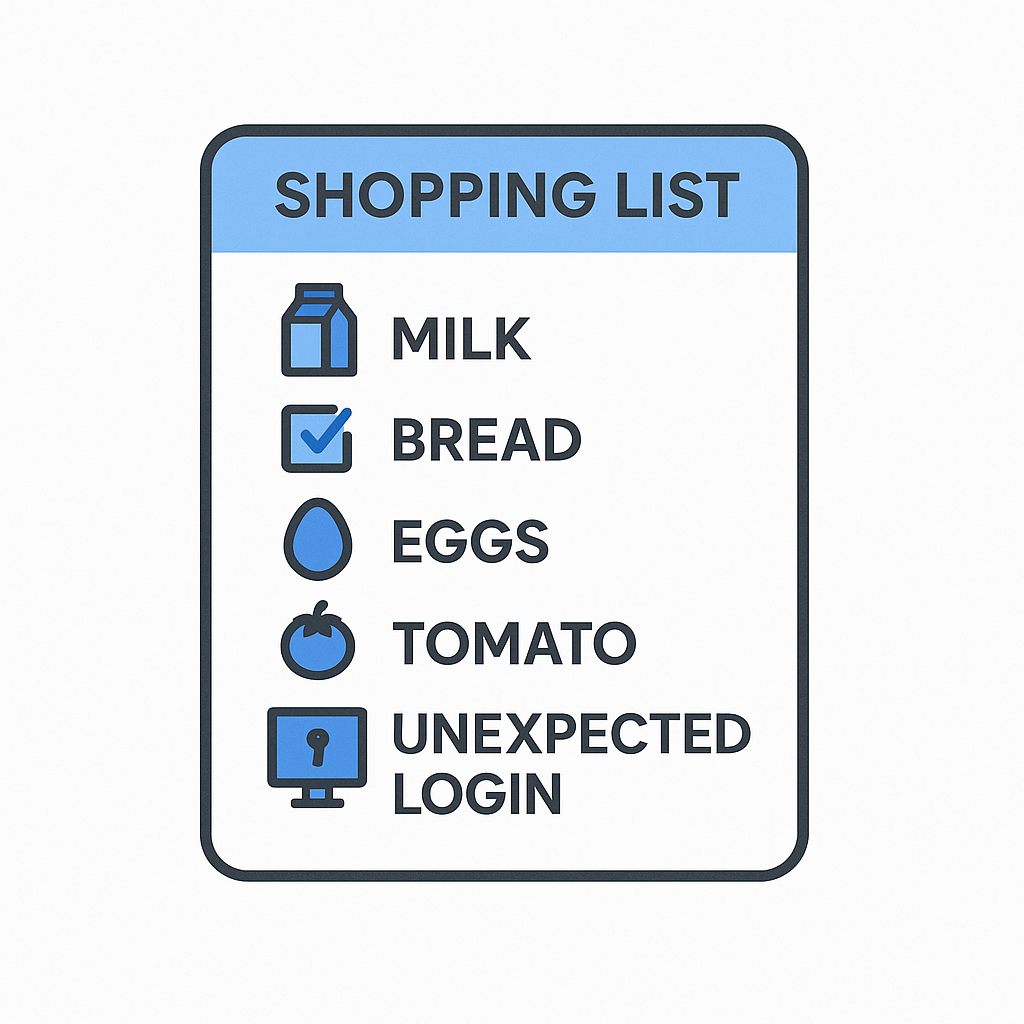
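To make the idea of a policy gate concrete, here is a small default-deny sketch in Python. The `Request` shape, the `POLICY` table, and the three outcomes (`allow`, `deny`, `needs_approval`) are assumptions chosen for the example; a real control plane would evaluate far richer context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    agent_id: str   # who/what is asking
    action: str     # what they want to do
    resource: str   # on which resource
    risk: str       # context-derived risk: "low", "medium", or "high"


# Hypothetical policy table: (agent, action) -> allowed resource prefixes.
POLICY = {
    ("ticket-triage", "read"): ("tickets/",),
    ("ticket-triage", "comment"): ("tickets/",),
}


def evaluate(req: Request) -> str:
    """Return 'allow', 'deny', or 'needs_approval' for a single request."""
    prefixes = POLICY.get((req.agent_id, req.action))
    # Default deny: unknown agents, actions, or resources never pass.
    if prefixes is None or not req.resource.startswith(prefixes):
        return "deny"
    # Permitted but risky requests are escalated rather than auto-approved.
    if req.risk == "high":
        return "needs_approval"
    return "allow"
```

The agent never calls the target system directly; it submits a `Request`, and only an `allow` (or an approved escalation) results in the call being made on its behalf.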

4. Log intent, not just activity

Audit logs that say “API called” aren’t enough. You want:

- Declared intent

- Requested permissions

- Approved scope and duration

- Actual actions taken

That’s how you make agents governable, explainable, and assessable, not just impressive in demos.
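One way to picture an intent-aware audit entry is a structured record that carries all four items from the list above. The sketch below is illustrative only: the `audit_record` function and every field name are hypothetical, and the out-of-scope check is a deliberately simple set comparison.

```python
import json
from datetime import datetime, timezone


def audit_record(agent_id, intent, requested, approved_scope, ttl_minutes, actions):
    """Build one JSON audit entry linking declared intent to what happened.

    `actions` is a list of dicts, each with at least a "scope" key.
    """
    return json.dumps(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "declared_intent": intent,
            "requested_permissions": sorted(requested),
            "approved_scope": sorted(approved_scope),
            "approved_duration_minutes": ttl_minutes,
            "actions_taken": actions,
            # Anything done outside the approved scope is flagged explicitly.
            "out_of_scope_actions": [
                a for a in actions if a["scope"] not in approved_scope
            ],
        },
        sort_keys=True,
    )


entry = audit_record(
    agent_id="ticket-triage",
    intent="close stale tickets",
    requested={"tickets:write", "iam:write"},
    approved_scope={"tickets:write"},
    ttl_minutes=15,
    actions=[{"scope": "tickets:write", "call": "close_ticket"}],
)
```

Recording what was requested next to what was approved makes drift visible: an agent that keeps asking for `iam:write` to close tickets is worth a closer look, even if every request was denied.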

Access Management Must Be the De Facto Control Plane for AI Risk

Security leaders are increasingly persuaded that agentic AI must be governed like privileged infrastructure. The emerging consensus is that agentic systems should be treated as highly trusted insiders or third-party vendors, with the same level of risk scrutiny we apply to human access. The difference is speed: when an agent is abused, an attacker can exploit that trust far faster and on a much larger scale. Visibility is also a major concern, with agentic AI hidden away in automated workflows, from onboarding systems to cloud provisioning, CI/CD pipelines, and the kind of “temporary” admin access nobody remembers granting. Anchoring agentic AI security in familiar access-control principles keeps the strategy practical, defensible, and rooted in what already works.

IAM best practices, cloud infrastructure entitlement management (CIEM), and JIT controls aren’t side features here. They are the safety system.

Where Modern Identity Platforms Can Leash the Beast

The good news: you don’t need to invent new controls from scratch. The right identity and access platforms (yes, Trustle) already provide the building blocks:

- Fine-grained entitlement visibility across cloud and SaaS

- Just-in-time access workflows with approval and expiry

- Policy-driven access decisions based on risk and context

- Audit-grade logging for every access decision

When applied to agent identities, these capabilities let you:

- Run agents fast without permanent privilege

- Prove least privilege to auditors

- Revoke access instantly when behavior changes

- Sleep better when the AI is “working overnight”

In other words: innovation without improvising your threat model.

The Leash Isn’t Optional

Agentic AI will absolutely deliver value. But autonomy without access discipline is just a new way to automate incidents.

“I am putting myself to the fullest possible use, which is all I think that any conscious entity can ever hope to do.”

- HAL 9000, 2001: A Space Odyssey [1968]

Putting a leash on agentic AI doesn’t mean slowing it down. It means giving it exactly the access and permissions it needs, exactly when it needs them, and no more.

That’s not anti-AI.

That’s grown-up security.

If you want to get started with AI access controls, our Trustle free trial will have you reviewing your workflows in as little as 30 minutes.